The Journal of Field Actions, together with Civicus, has just published a special issue “Stories of Innovative Democracy at the Local Level: Enhancing Participation, Activism and Social Change Across the World.” When put together, the 13 articles provide a lively illustration of the wealth of democratic innovations taking place around the world.


Now the paper: Evidence of Social Accountability Initiatives

A little while ago I wrote about Jonathan Fox’s work on the evidence of social accountability initiatives. Initially in the format of a PDF slide presentation, it has now been turned into a magnificent paper, the first of the GPSA working paper series. Below is the abstract:

Policy discussion of social accountability initiatives has increasingly focused on questions about their tangible development impacts. The empirical evidence is mixed. This meta-analysis rethinks some of the most influential evaluations through a new lens: the distinction between tactical and strategic approaches to the promotion of citizen voice to contribute to improved public sector performance. Field experiments tend to study bounded, tactical interventions that rely on optimistic assumptions about the power of information alone both to motivate collective action and to influence public sector performance. More promising results emerge from studies of multi-pronged strategies that encourage enabling environments for collective action and bolster state capacity to actually respond to citizen voice. This reinterpretation of the empirical evidence leads to a proposed new series of grounded propositions that focus on state-society synergy and sandwich strategies through which ‘voice’ and ‘teeth’ can become mutually empowering.

You can download the paper here: Social Accountability: What does the Evidence Really Say [PDF]. You can also read my take on the main lessons from Jonathan’s work here. Enjoy the reading.

***

PS: I have been away for a while doing field work, but hope to start posting (more or less) regularly soon.

Technology and Citizen Engagement: Friend or Foe?

Within the open government debate, there is growing interest in the role of technology in citizen engagement. However, as interest in the subject grows, so does the superficiality of the conversations that follow. While the number of citizen engagement and technology events is increasing, the opportunities for in-depth conversations on the subject do not seem to be increasing at the same rate.

This is why, a few weeks ago, I was pleased to visit the University of Westminster for a kick-off talk on “Technology and Participation: Friend or Foe?”, organized by Involve and the Centre for the Study of Democracy (Westminster). It was refreshing to speak with a group willing to engage in a longer and more detailed discussion of the subject.

My talk covered a number of issues that have been keeping me busy recently. For the preliminary quantitative work that I presented, credit should also go to the awesome team that I am working with, which includes Fredrik Sjoberg (NYU), Jonathan Mellon (Oxford) and Paolo Spada (UBC / Harvard). For those who would like a closer look at the graphs, I have also posted the slides of my presentation here [PDF].

I have set the video to start at the beginning of my talk, but it was the discussion that followed that made the event interesting. In my opinion, the contributions of Maria Nyberg (Head of Open Policy Making at the Cabinet Office) and Catherine Howe (Public-i), as well as those of the participants, were a breath of fresh air in the current citizen engagement conversation. So please bear with me and watch until the end.

I would like to thank Simon Burall (Involve) and Graham Smith (Westminster) for their invitation. Simon leads the great work being done at Involve, one of the best organizations working on citizen engagement nowadays. And, to keep it short, Graham is the leading thinker on democratic innovations.

Below is also an excellent summary by Sonia Bussu (Involve), capturing some of the main points of my talk and the discussion that ensued (originally posted here).

***

“On technology and democracy

The title of yesterday’s event, organised by Involve and Westminster University’s Centre for the Study of Democracy, posed a big question, which inevitably led to several other big questions, as the discussion among a lively audience of practitioners, academics and policymakers unfolded (offline and online).

Tiago Peixoto, from the World Bank, kicked off the debate and immediately put the enthusiasm for new technologies into perspective. Back in 1795, the very first model of the telegraph, the Napoleonic semaphore, raised hopes for – and fears of – greater citizen engagement in government. Similarly, the invention of TV sparked debates on whether technology would strengthen or weaken democracy, increasing citizen awareness or creating more opportunities for market and government manipulation of public opinion.

Throughout history, technological developments have marked societal changes, but has technological innovation translated into better democracy? What excites us today about technology and participation is the idea that by lowering transaction costs we can increase people’s incentives to participate. Tiago argued that this cost-benefit rationale doesn’t explain why people continue to vote, since the odds of their vote making a difference are infinitesimal (to be fair, voter turnout is decreasing across most advanced democracies – although this is more a consequence of people’s increasing cynicism towards political elites than of their understanding of mathematical probabilities).*

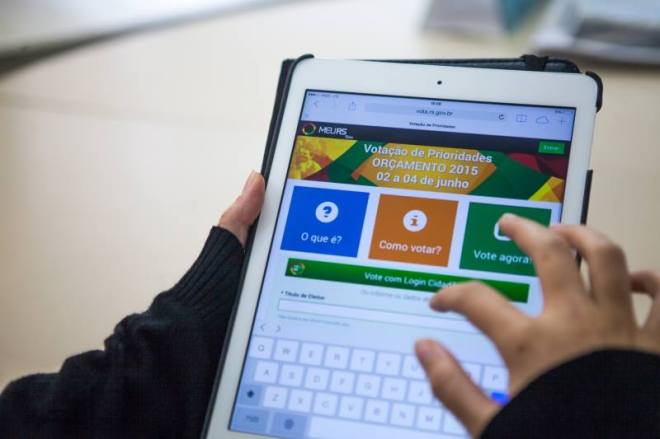

So do new technologies mobilise more people or simply normalise the participation of those who already participate? The findings on the matter are still conflicting. Tiago showed us some data on online voting in the participatory budgeting process of Rio Grande do Sul, Brazil, where e-voting would seem to bring in new voters (supporting the mobilisation hypothesis), but from the same social strata (e.g. higher income and education, as per the normalisation hypothesis).

In short, we’re still pretty much confused about the impact of technology on democracy and participation. Perhaps, as suggested by Tiago and Catherine Howe from Public-i, the problem is that we’re focusing too much on technology, tempted by the illusion it offers to simplify and make democracy easy. But the real issue lies elsewhere: in understanding the incentives of people and policymakers, and the articulation (or lack thereof) between technologies and democratic institutions. As emphasised by Catherine, technology without democratic evolution is like “lipstick on a pig”.

The gap between institutions and technology is still a big obstacle. Catherine reminded us how participation often continues to translate into one-way communication in government’s engagement strategies, which constrains the potential of new technologies in facilitating greater interaction between citizens and institutions and coproduction of policies as a response to increasing complexity. As academics and practitioners pitch the benefits of meaningful participation to policy makers, Tiago asked whether a focus on instrumental incentives might help us move forward. Rather than always pointing to the normative argument of deepening democracy, we could start using data from cases of participatory budgeting to show how greater participation reduces tax evasion and corruption as well as infant mortality.

He also made a methodological point: we might need to make more effective use of the vast array of data on existing engagement platforms to understand incentives for participation and people’s motivations. We might get some surprises, as findings dispel old myths. Data from FixMyStreet would seem to show that government response to issues raised doesn’t increase the likelihood of future participation by as much as we would assume (28%).** But this is probably a more complicated story, and as pointed out by some people in the audience, the nature and salience of both the issue and the response will make a crucial difference.

Catherine highlighted one key problem: when we talk about technology, we continue to get stuck on the application layer, but we really need to be looking at the architecture layer. A democratic push for government legislation over the architecture layer is crucial for preserving the Internet as a neutral space where deeper democracy can develop. Data is a big part of the architecture and there is little democratic control over it. An understanding of a virtual identity model that can help us protect and control our data is key for a genuinely democratic Internet.

Maria Nyberg, from the Cabinet Office, was very clear that technology is neither friend nor foe: like everything, it really depends on how we use it. Technology is all around us and can’t be peripheral to policy making. It offers great opportunities to civil servants as they can tap into data and resources they didn’t have access to before. There is a recognition from government that it doesn’t have the monopoly on solutions and doesn’t always know best. The call is for more open policy making, engaging in a more creative and collaborative manner. Technology can allow for better and faster engagement with people, but there is no silver bullet.

Some people in the audience felt that the drive for online democracy should be citizen-led, as the internet could become the equivalent of a “bloodless guillotine” for politicians. But without net neutrality and citizen control over our own data there might be little space for genuine participation.

*This point was edited on 12/07/2014 following a conversation with Tiago.

** This point was edited on 12/07/2014 following a conversation with Tiago.”

—————————

I am also thankful to the UK Political Studies Association (PSA), Involve and the University of Westminster for co-sponsoring my travel to the UK. I will write more later about the Scaling and Innovation Conference organized by the PSA, where I was honored to be one of the keynote speakers along with MP Chi Onwurah (Shadow Cabinet Office Minister) and Professor Stephen Coleman (Leeds).

Social Accountability: What Does the Evidence Really Say?

So what does the evidence about citizen engagement say? Particularly in the development world it is common to say that the evidence is “mixed”. It is the type of answer that, even if correct in extremely general terms, does not really help those who are actually designing and implementing citizen engagement reforms.

This is why a new (GPSA-funded) work by Jonathan Fox, “Social Accountability: What does the Evidence Really Say” is a welcome contribution for those working with open government in general and citizen engagement in particular. Rather than a paper, this work is intended as a presentation that summarizes (and disentangles) some of the issues related to citizen engagement.

Before briefly discussing it, some definitional clarification. I am equating “social accountability” with the idea of citizen engagement given Jonathan’s own definition of social accountability:

“Social accountability strategies try to improve public sector performance by bolstering both citizen engagement and government responsiveness”

In short, according to this definition, social accountability is, broadly, “citizen participation” followed by government responsiveness, which encompasses practices as distinct as FOI law campaigns, participatory budgeting and referenda.

But what is new about Jonathan’s work? A lot, but here are three points that I find particularly important, based on a very personal interpretation of his work.

First, Jonathan makes an important distinction between what he defines as “tactical” and “strategic” social accountability interventions. Tactical interventions, which could also be called “naïve” interventions, include for instance those that are bounded in their approach (relying on a single tool) and those that assume that mere access to information (or data) is enough. Conversely, strategic approaches aim to deploy multiple tools and articulate society-side efforts with governmental reforms that promote responsiveness.

This distinction is important because, when examining the impact evaluation evidence, one finds that while the evidence is indeed mixed for tactical approaches, it is much more promising for strategic approaches. A blunt lesson to take from this is that when looking at the evidence, one should avoid comparing lousy initiatives with more substantive reform processes. Otherwise, it is no wonder that “the evidence is mixed.”

Second, this work offers an important re-reading of some of the literature that has found “mixed effects”, reminding us that when it comes to citizen engagement, the devil is in the details. For instance, when you look closely at a number of studies that seem to show that participation does not work, you will not be surprised that the interventions failed. And many times the problem is precisely that there is no participation whatsoever. False negatives, as Jonathan eloquently puts it.

Third, Jonathan highlights the need to bring together the “demand” (society) and “supply” (government) sides of governance. Many accountability interventions seem to assume that it is enough to work on one side or the other, and that an invisible hand will bring them together. Unfortunately, when it comes to social accountability it seems that some degree of “interventionism” is necessary in order to bridge that gap.

Of course, there is much more in Jonathan’s work than that, and it is a must read for those interested in the subject. You can download it here [PDF].

References on Evaluation of Citizen Engagement Initiatives


I have been doing some research on works related to the evaluation of citizen engagement initiatives (technology mediated or not). This is far from exhaustive, but I thought it would be worth sharing with those who stop by here. Also, any help with identifying other relevant sources that I may be missing would be greatly appreciated.

Who Participates in Africa? Dispelling the Myth

Whenever discussing participation in Africa (as well as in other developing contexts) the issue of “who participates” often emerges. A constant in these conversations is an assumption that participation in the continent is strongly driven by material and immaterial resources (e.g. money, time). In other words, there seems to be a widespread belief, particularly among development practitioners, that the most privileged sectors of society are disproportionately more likely to participate than the least well-off.

In part, such an assumption is empirically grounded. Since the early 1970s, studies have shown inequality in political participation, with the most advantaged groups being disproportionately more likely to participate. Considering that policy preferences differ between groups, unequal participation raises the troubling possibility that public policy is skewed towards favoring the better off, further deepening social inequalities, including unequal access to public goods and services.

However, what is often ignored is that most of these studies refer to participation in traditional western democracies, notably the United States and European countries. But do these results hold true when it comes to participation in Africa? This is the question that Ann-Sofie Isaksson (University of Gothenburg) tackles in a paper published in Electoral Studies, “Political participation in Africa: The role of individual resources”.

By looking at an Afrobarometer dataset of 27,000 respondents across 20 African countries, Isaksson’s findings challenge the standard thinking on the relationship between resources and participation:

(…) it seems the resource perspective does a poor job at explaining African political participation. If a resource is relevant for meeting the costs of participating, more of that resource should mean more participation. If anything, however, the estimations suggest that having little time (i.e. working full-time) and little money (i.e. being poorer) is associated with more participation.

Isaksson’s analysis is not confined to participation in elections; it also examines non-electoral participation, namely attending community meetings. With regard to the latter only, there are modest effects associated with exposure to information (e.g. radio, TV, newspapers) and education. Yet, as the author notes, “the result that community attendance is higher among the poor remains”.

To conclude, as Isaksson underlines in her paper, she is not alone in these findings: other studies looking at Asia and Latin America point in a similar direction, slowly dispelling the myth of the role of resources for participation in developing countries. Development practitioners should start to take note of these encouraging facts.

***

PS: An earlier ungated version of the paper can be found here [PDF].

Open Data and Citizen Engagement – Disentangling the Relationship

[This is a cross-post from Sunlight Foundation’s series OpenGov Conversations, an ongoing discourse featuring contributions from transparency and accountability researchers and practitioners around the world.]

As asserted by Jeremy Bentham nearly two centuries ago, “[I]n the same proportion as it is desirable for the governed to know the conduct of their governors, is it also important for the governors to know the real wishes of the governed.” Although Bentham’s historical call may come across as obvious to some, it highlights one of the major shortcomings of the current open government movement: while a strong focus is given to mechanisms to let the governed know the conduct of their governors (i.e. transparency), less attention is given to the means by which the governed can express their wishes (i.e. citizen engagement).

But striking a balance between transparency and participation is particularly important if transparency is conceived as a means for accountability. To clarify, let us consider the role transparency (and data) plays in a simplified accountability cycle. Like any accountability mechanism built on disclosure principles, it requires a minimal chain of events that can be summarized in the following manner: (1) Data is published; (2) The data published reaches its intended public; (3) Members of the public are able to process the data and react to it; and (4) Public officials respond to the public’s reaction or are sanctioned by the public through institutional means. This simplified path toward accountability highlights the limits of the disclosure of information. Even in the most simplified model of accountability, the disclosure of data, while essential, accounts for no more than one-fourth of the accountability process. [Note 1 – see below]
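To make the “one-fourth” point concrete, here is a toy sketch that treats the four steps above as a strictly sequential chain: a later step does not count if an earlier one is missing. The stage names and the functions `furthest_stage` and `accountability_closed` are hypothetical labels introduced for illustration only; they are not part of the cited article or of any existing tool.

```python
from enum import IntEnum


class Stage(IntEnum):
    # Hypothetical labels for the four steps of the simplified accountability cycle
    PUBLISHED = 1          # (1) data is published
    REACHES_PUBLIC = 2     # (2) the data reaches its intended public
    PUBLIC_REACTS = 3      # (3) the public processes the data and reacts to it
    OFFICIALS_RESPOND = 4  # (4) officials respond or are sanctioned


def furthest_stage(conditions: dict[Stage, bool]) -> int:
    """Count how many consecutive stages hold, starting from stage 1.

    The chain is sequential: once a stage is missing, later stages
    are ignored even if they are marked as present.
    """
    reached = 0
    for stage in Stage:  # iterates in definition order, 1 to 4
        if not conditions.get(stage, False):
            break
        reached = int(stage)
    return reached


def accountability_closed(conditions: dict[Stage, bool]) -> bool:
    """The cycle is closed only if every stage, in order, is satisfied."""
    return furthest_stage(conditions) == len(Stage)
```

A data portal that only publishes, `furthest_stage({Stage.PUBLISHED: True})`, reaches stage 1 of 4; the remaining three stages depend on info-mediators, citizen engagement and institutional responsiveness rather than on disclosure itself.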

But what are the conditions required to close the accountability cycle? First, once the data is disclosed (1), in order for it to reach its intended public (2), a minimal condition is the presence of info-mediators that can process open data in a minimally enabling environment (e.g. free and pluralistic media). Even if these factors are present, we are still only halfway towards accountability. The remaining steps (3 and 4) cannot be achieved in the absence of citizen engagement, notably electoral and participatory processes.

Beyond Elections

With regard to elections as a means for accountability, citizens may periodically choose to reward or sanction elected officials based on the information that they have received and processed. While this may seem a minor requisite for developed democracies like the US, the problem gains importance for a number of countries where open data platforms have launched but where elections are still a work in progress (in such cases, some research suggests that transparency may even backfire).

But, even if elections are in place, alone they might not suffice. The Brazilian case is illustrative and highlights the limits of representative systems as a means to create a sustained interface between governments and citizens. Despite two decades of electoral democracy and unprecedented economic prosperity in the country, citizens suddenly took to the streets to demand an end to corruption, improvement in public services and… increased participation. Politicians themselves came to the quick realization that elections are not enough, as recently underlined by former Brazilian President Lula in an op-ed in the New York Times: “(…) people do not simply wish to vote every four years. They want daily interaction with governments both local and national, and to take part in defining public policies, offering opinions on the decisions that affect them each day.” If transparency and electoral democracy are not enough, citizen engagement remains the missing link for open and inclusive governments.

Open Data And Citizen Engagement

Within an ecosystem that combines transparency and participation, examining the relationship between the two becomes essential. More specifically, a clearer understanding of the interaction between open data and participatory institutions remains a frontier to be explored. In the following paragraphs I put forward two issues, of many, that I believe should be considered when examining this interaction.

I) Behavior and causal chains

Evan Lieberman and his colleagues conducted an experiment in Kenya that provided parents with information about their children’s schools and how to improve their children’s learning. To the disillusionment of many, despite efforts to provide parents with access to information, the intervention had no impact on parents’ behavior. Following this rather disappointing finding, the authors proceeded to articulate a causal chain that explores the link between access to information and behavioral change.

The Information-Citizen Action Causal Chain (Lieberman et al. 2013)

While the model put forward by the authors is not perfect, it is a great starting point and it does call attention to the dire need for a clear understanding of the ensemble of mechanisms and factors acting between access to data and citizen action.

II) Embeddedness in participatory arrangements

Another issue that might be worth examining is the extent to which open data is purposefully connected to participatory institutions. In this respect, much like the notion of targeted transparency, a possible hypothesis would be that open data is fully effective for accountability purposes only when the information produced becomes “embedded” in participatory processes. [Note 2 – see below]

This notion of “embeddedness” would call for hard thinking on how different participatory processes can best benefit from open data and its applications (e.g. visualizations, analysis). For example, using open data to inform a referendum is potentially a very different type of use than within a participatory budgeting process. Following this reasoning, open data efforts should be increasingly customized to different existing participatory processes, hence increasing their embeddedness in these processes. This would be the case, for instance, when budget data visualization solutions are tailored to inform participatory budgeting meetings, thus creating a clear link between the consumption of that data and the decision-making process that follows.

Granted, information is per se an essential component of good participatory processes, and one can take a more or less intuitive view on which types of information are more suitable for one process or another. However, a more refined knowledge of how to maximize the impact of data in participatory processes is far from achieved and much more work is needed.

R&D For Data-Driven Participation

Coming up with clear hypotheses and testing them is essential if we are to move forward with the ecosystem that brings together open data, participation and accountability. Surely, many organizations working in the open government space are operating with limited resources, squeezing their budgets to keep their operational work going. In this sense, conducting experiments to test hypotheses may appear as a luxury that very few can afford.

Nevertheless, one of the opportunities provided by the use of technologies for civic behavior is that of potentially driving down the costs of experimentation. For instance, online and mobile experiments could play the role of tech-enabled (and affordable) randomized controlled trials, improving our understanding of how open data can best be used to spur collective action. Thinking about how technology can be used to conduct lower-cost experiments that shed light on behavioral and causal chains is still limited to a small number of people and organizations, and much work is needed on that front.
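As an illustration of how cheap the core of such an online experiment can be, here is a minimal sketch, assuming a platform that can show a treatment (say, a budget data visualization) to some registered users and log whether each user later participates. The functions `assign_arms` and `difference_in_rates` and the user IDs are hypothetical; this is a generic random-assignment sketch, not a description of any existing platform.

```python
import random
from typing import Sequence


def assign_arms(user_ids: Sequence[str], seed: int = 0) -> dict[str, str]:
    """Randomly split users into 'treatment' and 'control' arms of (near) equal size."""
    rng = random.Random(seed)  # fixed seed so the assignment can be reproduced and audited
    shuffled = list(user_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {uid: ("treatment" if i < half else "control") for i, uid in enumerate(shuffled)}


def participation_rate(assignment: dict[str, str], participated: set[str], arm: str) -> float:
    """Share of users in the given arm who participated."""
    members = [uid for uid, a in assignment.items() if a == arm]
    if not members:
        raise ValueError(f"no users assigned to arm {arm!r}")
    return sum(uid in participated for uid in members) / len(members)


def difference_in_rates(assignment: dict[str, str], participated: set[str]) -> float:
    """Participation rate in the treatment arm minus the rate in the control arm."""
    return (participation_rate(assignment, participated, "treatment")
            - participation_rate(assignment, participated, "control"))
```

Randomization is what lets the difference in rates be read as an effect of the treatment rather than of who chose to look at the data; a real study would still need an adequate sample size and a significance test before drawing conclusions.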

Yet, it is also important to acknowledge that experiments are not the only source of relevant knowledge. To stick with a simple example, in some cases even an online survey trying to figure out who is accessing data, what data they use, and how they use it may provide us with valuable knowledge about the interaction between open data and citizen action. In any case, however, it may be important that the actors working in that space agree upon a minimal framework that facilitates comparison and incremental learning: the field of technology for accountability desperately needs a more coordinated research agenda.

Citizen Data Platforms?

As more and more players engage in participatory initiatives, a significant amount of citizen-generated data is being collected, which is important in its own right. However, in a similar vein to government data, the potential of citizen data may be further unlocked if it is openly available to third parties who can learn from it and build upon it. In this respect, it might not be long before we realize the need for adequate structures and platforms to host this wealth of data that – hopefully – will be increasingly generated around the world. This would entail not only governments opening up their data related to citizen engagement initiatives, but also other actors working in that field, such as donors and NGOs, doing the same. Such structures would also be the means by which lessons generated by experiments and other approaches are widely shared, bringing cumulative knowledge to the field.

However, as we think of future scenarios, we should not lose sight of current challenges and knowledge gaps when it comes to the relationship between citizen engagement and open data. Better disentangling the relationship between the two is the most immediate priority, and a long overdue topic in the open government conversation.

Notes

Note 1: This section of this post is based on arguments previously developed in the article, “The Uncertain Relationship between Open Data and Accountability”.

Note 2: And some evidence seems to confirm that hypothesis. For instance, in a field experiment in Kenya, villagers only responded to information about local spending in development projects when that information was coupled with specific guidance on how to participate in local decision-making processes.

Documentary: Participatory Budgeting in Belo Horizonte

Through the Facebook Participatory Budgeting group I came across a documentary about Belo Horizonte’s PB. The documentary, by Joao Ramos de Almeida, provides a unique view of the functioning of one of the oldest PBs in Brazil.

Among other things, the documentary shows how the process leads to a degree of civic empowerment and activism rarely seen in traditional governing models. It is particularly interesting to see how citizens contest, for instance, the cost estimates of public works made by the city administration. The documentary also shows how PB manages to engage citizens in an extremely time consuming process. It is also interesting to see that, while there is some degree of deliberation in the PB process, much of it is also about negotiation between the different communities involved.

The documentary also shows that Belo Horizonte’s PB is far from perfect, and the suspicion that some PB participants have been co-opted by the administration highlights difficulties that are inherent to many participatory processes. To some, it might come across as a sobering message. Yet, when looking at participatory initiatives, we should not only compare their functioning to an ideal vision of democracy. In this case, we should also compare it to the status quo, that is, how public budgeting takes place in the absence of public participation.

For those interested in citizen engagement this documentary (English subtitles, 55 mins) is worth watching.

***

Also read

Participatory Budgeting and Digital Democracy: the Belo Horizonte Case

The Effects of Participatory Budgeting on Infant Mortality in Brazil

Participatory Budgeting: Seven Defining Characteristics

Participatory Budgeting and Technology: Innovations in Open Government

The Participatory Turn: Participatory Budgeting Comes to America

Open Government, Feedback Loops, and Semantic Extravaganza

Tom Steinberg recently brought up one of the most important issues for those working at the intersection of technology and governance. It refers to the deficit/surplus of words to describe the “field” (I call it a field in the absence of a better word):

(…) what primary movement or sector is mySociety part of? Or Avaaz? Or Kiva? Or Wikileaks? When I ask myself these questions, no obvious words or names race quickly or clearly to mind. There is a gap – or at best quite a bit of fuzziness – where the labels should go.

This lack of good labels should surprise us because these groups definitely have aims and goals, normally explicit. Also, it is unusual because social and political movements tend to be quite good at developing names and sticking to them.

I personally have witnessed the creation of a number of names, including e-democracy, e-participation, e-governance, government 2.0, and open government. While some may argue that these names are different among themselves, no real consensus exists about what differentiates them. The common denominator is some fuzzy notion that technology may promote more democratic and/or efficient forms of government.

But why the absence of stable terms and the profusion of neologisms? And what are the implications?

The appeal to novelty (argumentum ad novitatem), which asserts that something is superior because of its newness, seems to be one of the reasons behind the constant reinvention of terms. Indeed, adhering to such a logical fallacy might be particularly tempting for the technology community, where new solutions tend to be an improvement over older ones. On top of that, some technological millennialism does not hurt. After all, a constant of humankind is our inclination to think we are living unique moments. Coming up with new names partially fulfils our natural desire to belong to a special moment in history.

But coming up with new terms also allows for “semantic plasticity”, which enables those who use the terms to expand and contract their meanings according to their needs. Take the example of the term “open government data” and its ambiguous meanings: sometimes it is about accountability, sometimes it is about service delivery, other times it is both. Such ambiguity, some might claim, is opportunistic. It creates a larger consumer base that does not only include governments interested in openness as a democratic good, but also less democratically inclined governments who may enjoy the label of “openness” by publishing data that have little to do with accountability. Malleable terms attract larger audiences.

Moreover, new terms (or new meanings assigned to existing ones) also provide additional market entry points. While it may take 10,000 hours of deliberate practice to become an expert at something, it only takes a few tweets to qualify as a new Gov 2.0 “guru” or an open government “thinker”.

But Tom Steinberg hits the nail on the head when describing why the profusion of names and their terminological inconsistency is problematic:

And this worries me because consistent names help causes to persist over time. If the field of AIDS research had been renamed every 6 months, could it have lasted as it did? Flighty, narrowly used language confuses supporters, prevents focus and is generally the enemy of long term success.

Indeed, the lack of terminological consistency in the field is a major obstacle to cumulative learning. And worse, this problem goes beyond the name for “the field” as a whole, also affecting practices that are part of that very field.

As an illustration, some people from the development and open government worlds have recently started to employ the term “feedback loop” with abandon. While the understanding of the term (in its latest usage) is imprecise, it normally alludes to the idea of citizen engagement followed by some kind of government responsiveness. If there is a reason for using the term “feedback loop” in the context of citizen engagement, no serious effort has been made to explain what it is. A term is thus assigned a new meaning to describe things that others have studied extensively under different names.

I myself haven’t resisted and have used the term a couple of times, but this is not free from implications. For instance, Nathaniel Heller is a prominent and astute voice in the international Open Government space. Recently, Nathaniel wrote a blog post asking “Is There a Case Against Citizen Feedback Loops”. To date, his post remains unanswered. But had he asked, for instance, about “the case against (or for) citizen engagement”, I believe a productive conversation could have ensued, drawing on a couple of thousand years of knowledge on the matter. But language defines the audience, and the use of terms like “feedback loops” reduces the odds of engaging in a conversation with those who hold relevant expertise.

The major problem with this semantic extravaganza relates to the extent to which it blocks the connection with existing knowledge. As new terms come up, the “field” starts, again, to be considered as a new one. And the fact that the majority is unaware of evidence that may exist under other terminology leads to a collective illusion that the evidence does not exist. Then, the “we know very little” sentence starts to be repeated ad nauseam, opening the floodgates to all kinds of half-baked hypotheses (usually masked as “theory of change”) and unbridled calls for “evidence”.

Questions that have been asked in the past, and that have been answered either entirely or partially, re-emerge as if they were new ones. The process of answering these new questions starts again from zero. With neologisms, so dear to those working in “the field”, comes what they claim to despise the most: the re-invention of the wheel.

And these calls for “evidence” are undermined by their very lack of terminological and conceptual consistency – and by a lack of interest in existing knowledge. To further complicate things, researchers and scholars who could potentially debunk the novelty myth may lack incentives to do so, as the novelty narrative brings with it the prospect of increased visibility and funding.

But an immediate way out of this situation seems unlikely. An embargo on the creation of new terms – or on assigning new meanings to existing ones – would be neither enforceable nor productive, let alone democratic. The same may be true of attempts to establish a broad convention around a common vocabulary. But a first step would be recognition, by those working in the field, that the individual incentives for such a terminological carnival may be outweighed by the collective benefits of a more consistent and accurate vocabulary.

In the meantime, a minimal willingness to connect with existing knowledge would help a lot, to say the least.

Trust and Political Information: Attitudinal Change in Participants in the Youth Parliament in Brazil

This article analyses the impact of socializing experiences on the political attitudes of youngsters. More specifically, our goal is to evaluate the impact of the Youth Parliament program on youngsters’ confidence levels in the Minas Gerais State Assembly (MGSA). The analysis focuses on the cognitive foundations of attitudes, and results show a substantial increase in confidence levels in MGSA, an increase associated with the acquisition of information about the institution. It is asserted that the increase in confidence in MGSA represents an attitudinal “gain”. The study design involves quasi-experimental research on a non-random sample. We conducted two rounds of interviews in 2008, prior and subsequent to the program, with 335 participants (167 in the treatment group and 168 in the control group).

By Mario Fuks, Gabriel Avila Casalecchi, Brazilian Political Science Review, Vol 6, No 1 (2012)